First, I just want to say that I really like Chisel. As a software developer who has dabbled in creating hardware only occasionally so far, I've found it much more enjoyable to learn and use than Verilog. Though my knowledge of both languages is still limited, Chisel's focus on hardware generation rather than description satisfies my desire to parameterize and automate everything in sight. The learning process for Chisel was much improved by the extensive documentation, including the Bootcamp and API docs. The abundance of open-source Chisel projects also provides valuable examples of how to use certain features. The basic building blocks provided by the language and utilities make it easy to get started creating hardware from scratch. The testing facilities that come standard also enable verification at an early stage, which appeals to me as a Test-Driven Development fanatic. Finally, Scala is a powerful language with many conveniences for writing clear and concise code.

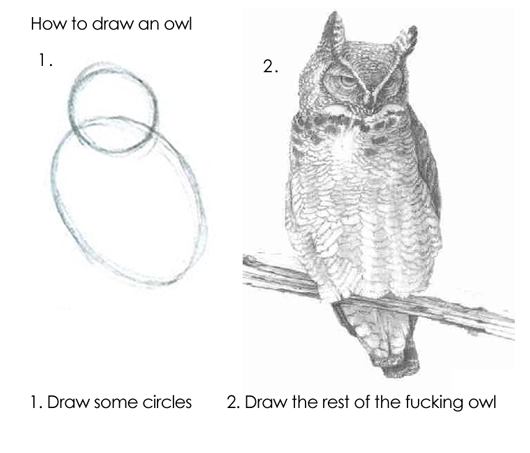

All of that being said, there are difficulties with hardware design that even Chisel does not fully address. The simple circuits that are taught in tutorials and bootcamps are fine for getting off the ground, but there is a big gap between those and what's required to create a processor or any block that's part of a larger system. Interfaces between memories, caches, and other blocks require synchronization or diplomacy, which involves keeping track of valid and/or ready signals in addition to internal state. Improving performance with techniques such as pipelining or parallelization increases the number of elements that have to work together at all times. The design then becomes significantly more complex, especially for software developers, such as myself, who write mostly procedural programs. The concurrency of hardware is a hard thing to wrap your head around. This is a problem because creating a processor is a logical first goal for a new designer. The abundance of open specifications, toolchains, and compatible software makes processors a rewarding project. We don't just want to design hardware; we also want to use it to run programs written by ourselves and others. So it feels like there's an owl-drawing problem, where the circles are the existing languages and documentation, and the owl is the processor.

So we have to add in more of these steps and take hardware generators a step further. Queues and shift registers are useful, but Chisel libraries should continue beyond those to offer frameworks that conform to the user's ultimate requirements more easily. dsptools is one step in the right direction, with its ready-made traits for adding interfaces for a variety of bus protocols. Just as for buses, the transition between processor specification and implementation must be made easier. Thankfully, instruction set specifications all have somewhat similar contents and format: the user-visible state, and the instruction encodings and their effects on the state and IO. It should be possible to harness the power of Chisel to generate a processor given a specification pattern that resembles these documents.

That's exactly what I'm attempting to do with the ProcessingModule framework. In it, a set of instructions and common, user-visible logic elements are defined. Instructions will declare their dependencies on state and/or external resources. An instruction also specifies what action will be done with those resources once they are available. Both sequential and combinational elements can be shared among instructions. Sequential elements can be general purpose register files or control/status registers. Large combinational elements like ALUs can also be shared to improve resource usage of the processor. The implementation will require parameters for data, instruction, and address widths, but there will eventually be more options to insert structural features like pipelining, speculation, and/or instruction re-ordering that improve processor performance but at the cost of additional hardware resources. The framework inherits from Module and features standard Decoupled and Valid interfaces, so it mixes in well with other Chisel code.

    abstract class ProcessingModule(dWidth : Int, dAddrWidth : Int, iWidth : Int, queueDepth : Int) extends Module {
      val io = IO(new Bundle {
        val instr = new Bundle {
          val in = Flipped(util.Decoupled(UInt(iWidth.W)))
          val pc = util.Valid(UInt(64.W))
        }
        val data = new Bundle {
          val in = Flipped(util.Decoupled(UInt(dWidth.W)))
          val out = new Bundle {
            val addr = util.Valid(UInt(dAddrWidth.W))
            val value = util.Decoupled(UInt(dWidth.W))
          }
        }
      })

      def initInstrs : Instructions
      …
    }

This is the beginning of the ProcessingModule class. First, there are constructor parameters for the widths of data loaded from and stored to memory, data memory addresses, and instructions. There is also a parameter to specify the depth of a queue that receives incoming instructions. Following that is a basic IO assembly that's divided into instruction and data bundles. The instruction part has a Decoupled port for the incoming instructions and an output port for the program counter. The program counter is currently fixed at 64 bits wide, but that will be made parameterizable in the future. The data bundle has a Decoupled input port and output address and value ports. Following the IO is the one abstract method, initInstrs, which will be called only once later in the constructor. Modules that inherit from ProcessingModule must implement this method to return the logic and instruction set they want to use. The return type is another abstract class called Instructions.
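As a rough software analogy for the Decoupled instruction port and the queueDepth parameter described above, the following plain-Scala sketch models a bounded queue with ready/valid semantics. The class name ReadyValidQueueModel and its methods are hypothetical and are not part of the framework or of Chisel. The sketch only illustrates the handshake rule that a transfer happens in a cycle where valid and ready are both true.

```scala
import scala.collection.mutable

// Software analogy of a Decoupled (ready/valid) input feeding a bounded queue.
// All names here are hypothetical; this is not how ProcessingModule is implemented.
final class ReadyValidQueueModel(depth: Int) {
  private val entries = mutable.Queue.empty[BigInt]

  // The receiving side is "ready" while there is still room in the queue.
  def ready: Boolean = entries.size < depth

  // One producer cycle: the value is accepted only when valid and ready are both true.
  def offer(valid: Boolean, bits: BigInt): Boolean = {
    val fire = valid && ready
    if (fire) entries.enqueue(bits)
    fire
  }

  // One consumer cycle: remove and return the oldest entry, if there is one.
  def take(): Option[BigInt] =
    if (entries.nonEmpty) Some(entries.dequeue()) else None
}
```

With a depth of 3, a fourth back-to-back offer is refused until the consumer takes an entry, which is the back-pressure a producer sees on a Decoupled port.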

    abstract class Instructions {
      def logic : Seq[InstructionLogic]
    }

    abstract class InstructionLogic (val name : String, val dataInDepend : Boolean, val dataOutDepend : Boolean) {
      def decode( instr : UInt) : Bool
      def load(instr : UInt) : UInt = 0.U
      def execute( instr : UInt) : Unit
      def store(instr : UInt) : UInt = 0.U
    }

Subclasses of Instructions should declare logic shared between instructions in their constructor. The logic method also needs to be defined to return a sequence of InstructionLogic. Each instance of the InstructionLogic class represents an instruction. There are parameters for the instruction name and whether or not it depends on memory. The name field currently only exists to distinguish instructions in the Chisel code, but it will eventually prove useful for automatically generating debugging utilities for simulation. The dataInDepend and dataOutDepend parameters should be set to true if the instruction will read from or write to memory, respectively.

The virtual methods within the InstructionLogic class roughly correspond to the stages in a traditional pipelined processor architecture. The decode and execute methods must be implemented by all instructions. The decode method takes a value given to the processor via the instruction bus and outputs a high value if it's a match for this particular instruction type. Then, the rest of the stages will be run for that InstructionLogic instance. The execute method takes the same instruction value and performs some operation on the processor state. It does not return any value. If a memory dependency exists for the instruction, then the load and/or store methods will also be called, so they should also be implemented by the designer. Both of these methods should return the address in memory that should be accessed. For instructions that read from memory, the value in memory at that address will be retrieved and stored in the dataIn register. For instructions that store to memory, the value in the dataOut register will be stored at the given address.
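The order in which these methods are used can be sketched as a small software model. The following plain-Scala code is only an illustration under stated assumptions: InstrModel, State, and Dispatch are hypothetical names, Int stands in for UInt, and everything happens in a single step, whereas the real hardware may spread the stages over several cycles.

```scala
import scala.collection.mutable

// Shared state assumed by this sketch: data memory plus the dataIn/dataOut registers.
final class State(val mem: mutable.Map[Int, Int]) {
  var dataIn: Int = 0
  var dataOut: Int = 0
}

// Hypothetical software mirror of InstructionLogic, using Int in place of UInt.
abstract class InstrModel(val name: String,
                          val dataInDepend: Boolean,
                          val dataOutDepend: Boolean) {
  def decode(instr: Int): Boolean
  def load(instr: Int): Int = 0            // address to read when dataInDepend is set
  def execute(instr: Int, st: State): Unit
  def store(instr: Int): Int = 0           // address to write when dataOutDepend is set
}

object Dispatch {
  // Runs one instruction and returns the name of the instruction that matched, if any.
  def step(instr: Int, logic: Seq[InstrModel], st: State): Option[String] =
    logic.find(_.decode(instr)).map { l =>
      // Memory read happens first so that execute can use dataIn.
      if (l.dataInDepend) st.dataIn = st.mem.getOrElse(l.load(instr), 0)
      l.execute(instr, st)
      // Memory write happens last so that execute can set dataOut.
      if (l.dataOutDepend) st.mem(l.store(instr)) = st.dataOut
      l.name
    }
}
```

The point of the sketch is the ordering: decode selects one instruction, load supplies an address before execute runs, and store supplies an address after it.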

Following is an example of a simple processor that adds numbers to a pair of registers. Some common routines for extracting subfields from an instruction are defined at the top. In the initInstrs method, an Instructions instance is created with the 2-element register array that's accessible to all instructions. Then the logic method begins by defining a nop instruction, which contains only logic that indicates whether the current instruction is a nop. There is no logic defined in the execute method, because the instruction does not do anything.

    class AdderModule(dWidth : Int) extends ProcessingModule(dWidth, AdderInstruction.addrWidth, AdderInstruction.width, 3) {
      // Helpers for extracting subfields from an instruction
      def getInstrCode(instr : UInt) : UInt = instr(2,0)
      def getInstrReg(instr : UInt) : UInt = instr(3)
      def getInstrAddr(instr : UInt) : UInt = instr(7,4)

      def initInstrs = new Instructions {
        // Register array shared by all instructions
        val regs = RegInit(VecInit(Seq.fill(2){ 0.U(dWidth.W) }))

        def logic = {
          new InstructionLogic("nop", dataInDepend=false, dataOutDepend=false) {
            def decode ( instr : UInt ) : Bool = getInstrCode(instr) === AdderInstruction.codeNOP
            // nop changes no state, so execute returns the unit value
            def execute ( instr : UInt ) : Unit = ()
          } ::
          new InstructionLogic("incrData", dataInDepend=true, dataOutDepend=false) {
            …
          }
          …
        }
      }
    }
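The bit layout implied by getInstrCode, getInstrReg, and getInstrAddr above (code in bits 2..0, register in bit 3, address in bits 7..4) can be modeled in plain Scala to check encodings by hand. This is a sketch, not the post's AdderInstruction object: AdderEncodingModel is a hypothetical name, and the opcode values 1 and 3 are inferred from the test log later in the post, where incr1 and store on register 0 appear as instr.in = 1 and instr.in = 3. The other opcodes are not shown in the post and are left out.

```scala
// Plain-Scala model of the 8-bit instruction layout used by AdderModule.
// Hypothetical helper; the real AdderInstruction object is not shown in the post.
object AdderEncodingModel {
  // Assumed opcode values, inferred from the test log (incr1 -> 1, store -> 3).
  val codeIncr1 = 1
  val codeStore = 3

  // Packs the three fields into one instruction word.
  def encode(code: Int, reg: Int = 0, addr: Int = 0): Int = {
    require(code >= 0 && code < 8, "code must fit in bits 2..0")
    require(reg == 0 || reg == 1, "reg must fit in bit 3")
    require(addr >= 0 && addr < 16, "addr must fit in bits 7..4")
    (addr << 4) | (reg << 3) | code
  }

  def code(instr: Int): Int = instr & 0x7         // mirrors instr(2,0)
  def reg(instr: Int): Int = (instr >> 3) & 0x1   // mirrors instr(3)
  def addr(instr: Int): Int = (instr >> 4) & 0xF  // mirrors instr(7,4)
}
```

For example, encoding code 2 with register 1 and address 5 gives (5 << 4) | (1 << 3) | 2, which is 90, and the three extractors recover 2, 1, and 5 from that word.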

The next instruction, incr1, increments the specified register by 1. The register to increment is determined from a subfield in the instruction, which is extracted in the execute stage with the getInstrReg method defined above.

    new InstructionLogic("incr1", dataInDepend=false, dataOutDepend=false) {
      def decode ( instr : UInt ) : Bool = {
        getInstrCode(instr) === AdderInstruction.codeIncr1
      }
      def execute ( instr : UInt ) : Unit = {
        regs(getInstrReg(instr)) := regs(getInstrReg(instr)) + 1.U
      }
    }

The incrData instruction increments a register by a number stored in memory. The dataInDepend parameter for this instruction is set to true since it needs to read from memory. The load method is overridden here to provide the address to read from, which also comes from a subfield of the instruction. The value from memory is then automatically stored in the built-in dataIn register, which is used in the execute method.

    new InstructionLogic("incrData", dataInDepend=true, dataOutDepend=false) {
      def decode ( instr : UInt ) : Bool = {
        getInstrCode(instr) === AdderInstruction.codeIncrData
      }
      override def load ( instr : UInt ) : UInt = getInstrAddr(instr)
      def execute ( instr : UInt ) : Unit = {
        regs(getInstrReg(instr)) := regs(getInstrReg(instr)) + dataIn
      }
    }

The store instruction stores a register value to memory, and thus has its dataOutDepend parameter set to true. The dataOut register is written in the execute method. The value in the dataOut register will be stored at the address returned by the store method.

    new InstructionLogic("store", dataInDepend=false, dataOutDepend=true) {
      def decode ( instr : UInt ) : Bool = {
        getInstrCode(instr) === AdderInstruction.codeStore
      }
      def execute ( instr : UInt ) : Unit = {
        dataOut := regs(getInstrReg(instr))
      }
      override def store ( instr : UInt ) : UInt = getInstrAddr(instr)
    }

bgt (Branch if Greater Than) skips the next instruction if the specified register is greater than zero. This is implemented by adding 2 to the built-in pcReg register in the execute method.

    new InstructionLogic("bgt", dataInDepend=false, dataOutDepend=false) {
      def decode ( instr : UInt ) : Bool = {
        getInstrCode(instr) === AdderInstruction.codeBGT
      }
      def execute ( instr : UInt ) : Unit = {
        when ( regs(getInstrReg(instr)) > 0.U ) { pcReg.bits := pcReg.bits + 2.U }
      }
    }
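To check expectations for short programs, the five instructions described above can be written as a small software reference model. The sketch below is plain Scala with hypothetical names (Instr, Cpu, AdderGoldenModel). It assumes that the program counter advances by 1 for every instruction except a taken bgt, which advances it by 2, and it ignores the dWidth wraparound that the hardware registers would have.

```scala
// Instructions as an algebraic data type, so no opcode values need to be assumed.
sealed trait Instr
case object Nop extends Instr
final case class Incr1(reg: Int) extends Instr
final case class IncrData(reg: Int, addr: Int) extends Instr
final case class Store(reg: Int, addr: Int) extends Instr
final case class Bgt(reg: Int) extends Instr

// User-visible state: program counter, two registers, and data memory.
final case class Cpu(pc: Int = 0,
                     regs: Vector[Int] = Vector(0, 0),
                     mem: Map[Int, Int] = Map.empty)

object AdderGoldenModel {
  // Applies one instruction and returns the next state.
  def step(cpu: Cpu, instr: Instr): Cpu = instr match {
    case Nop =>
      cpu.copy(pc = cpu.pc + 1)
    case Incr1(r) =>
      cpu.copy(pc = cpu.pc + 1, regs = cpu.regs.updated(r, cpu.regs(r) + 1))
    case IncrData(r, a) =>
      // Unwritten memory locations read as 0 in this model.
      cpu.copy(pc = cpu.pc + 1,
               regs = cpu.regs.updated(r, cpu.regs(r) + cpu.mem.getOrElse(a, 0)))
    case Store(r, a) =>
      cpu.copy(pc = cpu.pc + 1, mem = cpu.mem.updated(a, cpu.regs(r)))
    case Bgt(r) =>
      // Taken branch skips the next instruction.
      cpu.copy(pc = cpu.pc + (if (cpu.regs(r) > 0) 2 else 1))
  }

  // Fetches by pc until the pc leaves the program.
  def run(program: Vector[Instr], start: Cpu = Cpu()): Cpu = {
    var cpu = start
    while (cpu.pc >= 0 && cpu.pc < program.length) cpu = step(cpu, program(cpu.pc))
    cpu
  }
}
```

Running the program Incr1(0), Bgt(0), Incr1(0), Store(0, 2) in this model takes the branch, skips the second increment, and leaves the value 1 at address 2.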

Testing ProcessingModule began with the OrderedDecoupledHWIOTester from the iotesters package. The class makes it easy to define a sequence of input and output events without having to explicitly define the exact number of cycles to advance or which ports to peek and poke at. The logging abilities also enable some debugging without having to inspect waveforms. Even with these advantages, I found it lacking in some aspects and even encountered a bug that hindered my progress for several days. Therefore, I created my own version of the class called DecoupledTester. This new class orders input and output events together instead of executing all input events immediately. By default, it fails the test when the maximum tick count is exceeded, which usually happens if the design under test incorrectly blocks on an input. DecoupledTester also automatically initializes all design inputs, thus reducing both test size and elaboration errors. Finally, the log messages emitted by tests are slightly more verbose and clearly formatted. The following is an example of a test written for the AdderModule described above:

    it should "increment by 1" in {
      assertTesterPasses {
        new DecoupledTester("incr1"){
          val dut = Module(new AdderModule(dWidth))
          val events = new OutputEvent((dut.io.instr.pc, 0)) ::
            new InputEvent((dut.io.instr.in, AdderInstruction.createInt(codeIncr1, regVal=0.U))) ::
            new OutputEvent((dut.io.instr.pc, 1)) ::
            new InputEvent((dut.io.instr.in, AdderInstruction.createInt(codeStore, regVal=0.U))) ::
            new OutputEvent((dut.io.data.out.value, 1)) ::
            Nil
        }
      }
    }

This is an example of output from the test when the design is implemented correctly:

    Waiting for event 0: instr.pc = 0
    Waiting for event 1: instr.in = 1
    Waiting for event 2: instr.pc = 1
    Waiting for event 2: instr.pc = 1
    Waiting for event 2: instr.pc = 1
    Waiting for event 3: instr.in = 3
    Waiting for event 4: data.out.value = 1
    Waiting for event 4: data.out.value = 1
    Waiting for event 4: data.out.value = 1
    All events completed!
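The ordering behaviour described for DecoupledTester, where input and output events are handled strictly in sequence and the test fails once a tick limit is reached, can be approximated in software. The following plain-Scala sketch is not the DecoupledTester implementation: Event, InputEv, OutputEv, DutModel, and OrderedEvents are all hypothetical names, and the design under test is reduced to two methods.

```scala
sealed trait Event
final case class InputEv(value: Int) extends Event      // value to push into the design
final case class OutputEv(expected: Int) extends Event  // value the design must produce

// Stand-in for a design under test; a real test would drive Chisel ports instead.
trait DutModel {
  def offer(value: Int): Boolean // true when the input was accepted this tick
  def poll(): Option[Int]        // an output value, if one is valid this tick
}

object OrderedEvents {
  // Returns Right(ticks used) on success, Left(reason) on mismatch or timeout.
  def run(dut: DutModel, events: Seq[Event], maxTicks: Int): Either[String, Int] = {
    var idx = 0
    var tick = 0
    while (idx < events.length) {
      if (tick >= maxTicks) return Left(s"timed out waiting for event $idx")
      // Only the current event is attempted; later events wait their turn.
      events(idx) match {
        case InputEv(v) =>
          if (dut.offer(v)) idx += 1
        case OutputEv(e) =>
          dut.poll() match {
            case Some(x) if x == e => idx += 1
            case Some(x)           => return Left(s"event $idx: expected $e, got $x")
            case None              => () // nothing valid yet, keep waiting
          }
      }
      tick += 1
    }
    Right(tick)
  }
}
```

Because an event that never completes eventually hits the tick limit, a design that wrongly blocks on an input shows up as a timeout naming the stuck event rather than as a hang.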

The framework does well enough for the simple examples explained here, but my next goal is to prove its utility with a "real" instruction set. The first target is RISC-V, followed by other open architectures like POWER and OpenRISC. In parallel to these projects, I'll work on improving the designer interface of the framework by reducing boilerplate code and enhancing debugging capabilities. Once some basic processor implementations have been written and tested, there will be enhancements to improve performance by pipelining, branch prediction, and instruction re-ordering. In the meantime, here are the slides for this presentation and a PDF containing the AdderModule example that fits on a 6x4 inch flash card.